The Truth About Predictive Policing and Race

On Sunday, The New York Times published a well-meaning op-ed about fears of racial bias in artificial intelligence and predictive policing systems. The author, Bärí A. Williams, should be commended for engaging the debate about building “intelligent” computer systems to predict crime, and for framing these developments in racial justice terms. One thing we have learned about new technologies is that they routinely replicate deep-seated social inequalities, including racial discrimination. In just the last year, we have seen facial recognition technologies that cannot accurately identify people of color, and familial DNA databases challenged as discriminatory toward over-policed populations. Artificial intelligence policing systems will be no different. If you unthinkingly train A.I. models on racially biased inputs, the outputs will reflect the underlying societal inequality.

But the issue of racial bias and predictive policing is more complicated than the op-ed suggests. I should know. For several years, I have been researching predictive policing because I was concerned about the racial justice impacts of these new technologies. I am still concerned, but I think we need to be clear about where the real threats lie.

Take, for example, the situation in Oakland, California, described in the op-ed. Ms. Williams eloquently writes:

It’s no wonder criminologists have raised red flags about the self-fulfilling nature of using historical crime data.

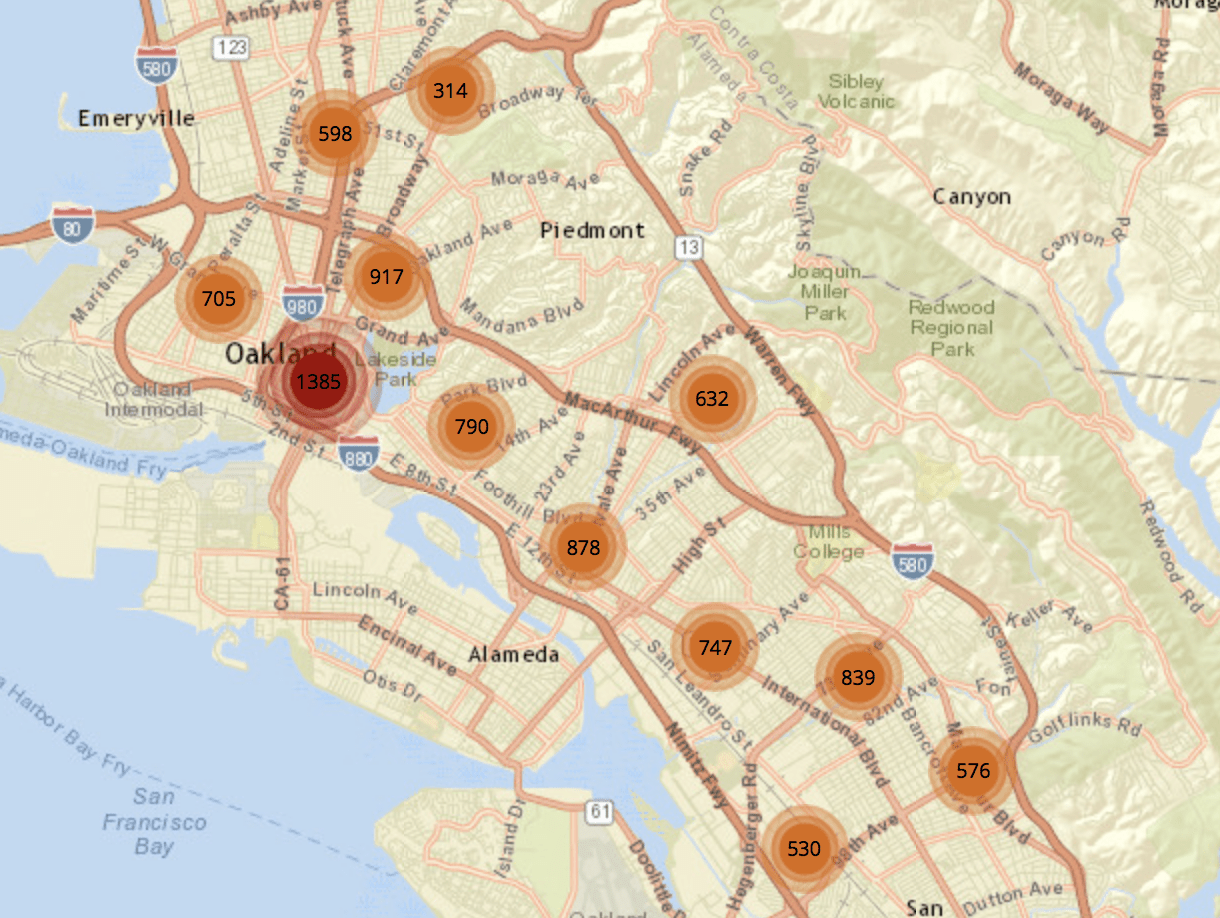

This hits close to home. An October 2016 study by the Human Rights Data Analysis Group concluded that if the Oakland Police Department used its 2010 record of drug-crimes information as the basis of an algorithm to guide policing, the department “would have dispatched officers almost exclusively to lower-income, minority neighborhoods,” despite the fact that public-health-based estimates suggest that drug use is much more widespread, taking place in many other parts of the city where my family and I live.

Those “lower-income, minority neighborhoods” contain the barbershop where I take my son for his monthly haircut and our favorite hoagie shop. Would I let him run ahead of me if I knew that simply setting foot on those sidewalks would make him more likely to be seen as a criminal in the eyes of the law?

These are honest fears.

If, as the op-ed suggested, the Oakland Police Department used drug arrest statistics to forecast where future crime would occur, then its crime predictions would be as racially discriminatory as its arrest activity. In essence, the prediction would simply replicate arrest patterns (where police patrol), not drug use (where people use drugs). Patrol patterns, in turn, might be shaped by socio-economic and racial factors rather than by the underlying prevalence of the crime. That would be a discriminatory result, which is why it is quite fortunate that Oakland is doing no such thing. In fact, the Human Rights Data Analysis Group (HRDAG) report that Ms. Williams cites is a hypothetical model examining how a predictive policing system could be racially biased. The HRDAG researchers received a lot of positive press for their study because it used a real predictive policing algorithm designed by PredPol, an actual predictive policing company. But PredPol does not predict drug crimes and does not use arrests in its algorithm, precisely because the company knows the results would be racially discriminatory. Nor does Oakland use PredPol. So the hypothetical fear is not inaccurate, but the suggestion that this is how predictive policing is actually being used around Oakland barbershops is slightly misleading.
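To make the arrests-versus-use distinction concrete, here is a toy calculation with entirely made-up numbers; it is not drawn from the HRDAG study or from any Oakland data. It assumes, as a simplification, that the chance a drug user is arrested scales with how much patrol time a neighborhood receives. The class and function names are hypothetical.

```python
"""Toy model with made-up numbers: arrest counts track patrol presence,
not the underlying rate of drug use. Not based on HRDAG's or Oakland's data."""

from dataclasses import dataclass


@dataclass
class Neighborhood:
    name: str
    residents: int
    drug_use_rate: float  # assumed share of residents who use drugs
    patrol_share: float   # assumed share of citywide patrol hours spent here


def expected_arrests(n: Neighborhood, detection_rate: float) -> float:
    """Expected drug arrests in one neighborhood.

    Assumption: the chance that a user is arrested scales linearly with
    patrol presence, so identical behavior yields more arrests where
    police spend more time.
    """
    users = n.residents * n.drug_use_rate
    return users * detection_rate * n.patrol_share


def forecast_from_arrests(arrests: dict[str, float]) -> list[str]:
    """Naive 'prediction': rank neighborhoods by historical arrests,
    which simply echoes where enforcement already happened."""
    return sorted(arrests, key=arrests.get, reverse=True)


city = [
    # A: less drug use, heavy patrol.
    Neighborhood("A", residents=10_000, drug_use_rate=0.08, patrol_share=0.8),
    # B: more drug use, light patrol.
    Neighborhood("B", residents=10_000, drug_use_rate=0.12, patrol_share=0.2),
]
arrests = {n.name: expected_arrests(n, detection_rate=0.5) for n in city}
print({name: round(count) for name, count in arrests.items()})  # {'A': 320, 'B': 120}
print(forecast_from_arrests(arrests))                           # ['A', 'B']
```

Neighborhood B has the higher assumed rate of drug use, yet a forecast built on arrests ranks it last. That is the sense in which such a model would predict policing rather than crime.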

Do not mistake this for a minimization of the racial justice problems in Oakland. As Stanford Professor Jennifer Eberhardt and other researchers have shown, the Oakland Police Department has a demonstrated pattern of racial discrimination that affects who gets stopped, arrested, and handcuffed, a pattern that suggests deep systemic problems. But linking real fears about racially unfair policing to hypothetical fears about predictive technologies (which are not being used as described) distorts the critique.

Similarly, the op-ed singles out HunchLab as a company that uses artificial intelligence to build predictive policing systems:

These downsides of A.I. are no secret. Despite this, state and local law enforcement agencies have begun to use predictive policing applications fueled by A.I. like HunchLab, which combines historical crime data, moon phases, location, census data and even professional sports team schedules to predict when and where crime will occur and even who’s likely to commit or be a victim of certain crimes.

The problem with historical crime data is that it’s based upon policing practices that already disproportionately hone in on blacks, Latinos, and those who live in low-income areas.

If the police have discriminated in the past, predictive technology reinforces and perpetuates the problem, sending more officers after people who we know are already targeted and unfairly treated.

This statement contains accurate and concerning elements. Systems built on past crime reports (not just arrests) will focus police on poor communities of color in a way that might exacerbate over-policing. This self-fulfilling prophecy problem is something I and others have flagged as it relates to constitutional law, racial bias, and distortions of police practices. It is a worthy concern that bears directly on racial inequality.

But I would offer two cautions about the op-ed’s analysis. The first is the need to be precise in identifying what technology you are critiquing. HunchLab is a place-based predictive policing technology. It does not make any person-based predictions, so the claim that HunchLab predicts “even who’s likely to commit or be a victim of certain crimes” is wrong. It conflates place-based technologies with person-based systems like the Chicago Police Department’s “strategic subjects list” (a.k.a. the “heat list”). These are different systems that raise different concerns. Place-based predictive policing technologies (which forecast sites of criminal activity) and person-based predictive systems (which forecast individuals at risk for violence) differ in practice, theory, and design, and should not be lumped into the same analysis, even if both might ultimately harm communities of color.

Second, technologies like HunchLab do not blindly follow the data. Instead, they recognize, much as the op-ed worries, that sometimes the policing remedy does more harm than good. So, in response to concerns about over-policing, HunchLab might re-weight the severity of crimes in its predictive models to avoid aggressive and unthinking policing. For example, if certain crimes are deemed less threatening to community order, HunchLab recommends less deterrence-based policing in those areas to avoid unnecessary police-citizen contact. The company deliberately tweaks the model to avoid contact that might increase racial tension. Not all systems do this, and some racially discriminatory impacts will remain, but HunchLab, the company the op-ed singled out, does attempt to avoid the problem.
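The general idea of severity re-weighting can be sketched roughly as follows. This is a hypothetical illustration only: the crime categories, weights, threshold, and function names are invented, and nothing here reflects HunchLab’s actual model or parameters. The point is that a grid cell forecast to have many minor offenses can score lower than a cell forecast to have a few serious ones, so it does not trigger deterrence patrols.

```python
"""Hypothetical sketch of severity re-weighting for place-based forecasts.
Crime categories, weights, threshold, and names are invented for
illustration; this is NOT HunchLab's actual model or parameters."""

# Assumed severity weights: minor offenses count for far less than violent ones.
SEVERITY = {
    "aggravated_assault": 6.0,
    "burglary": 3.0,
    "vandalism": 0.5,
    "drug_possession": 0.25,
}
DEFAULT_WEIGHT = 1.0      # assumed weight for any category not listed above
PATROL_THRESHOLD = 20.0   # assumed cutoff for recommending deterrence patrol


def weighted_risk(forecast_counts: dict[str, float]) -> float:
    """Sum forecast counts per crime type, each scaled by its severity weight."""
    return sum(
        SEVERITY.get(crime, DEFAULT_WEIGHT) * count
        for crime, count in forecast_counts.items()
    )


def recommend(cells: dict[str, dict[str, float]]) -> dict[str, str]:
    """Map each grid cell to a patrol recommendation based on weighted risk."""
    return {
        cell: ("deterrence patrol"
               if weighted_risk(counts) >= PATROL_THRESHOLD
               else "no extra patrol")
        for cell, counts in cells.items()
    }


# Cell 1: many minor offenses. Cell 2: a few serious ones.
cells = {
    "cell_1": {"drug_possession": 40, "vandalism": 10},   # raw total 50
    "cell_2": {"aggravated_assault": 3, "burglary": 2},   # raw total 5
}
for cell, counts in cells.items():
    print(cell, sum(counts.values()), weighted_risk(counts))
# cell_1 50 15.0
# cell_2 5 24.0
print(recommend(cells))
# {'cell_1': 'no extra patrol', 'cell_2': 'deterrence patrol'}
```

Ranked by raw counts (50 versus 5), the first cell would draw police; once severity is weighted, the recommendation flips. Where to set those weights is a judgment about community harm rather than a purely technical choice.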

I am not minimizing the risk that predictive policing technologies will create new forms of racial bias. In fact, I have built a scholarly career on similar critical arguments, and I just wrote a book, The Rise of Big Data Policing: Surveillance, Race, and the Future of Law Enforcement, that explains how race is a central problem with these new technologies. But my point is that if you are going to criticize racial bias in predictive policing, you have to do it on the merits of where the dangers actually lie. In my mind, the more immediate focus of concern should be the Chicago Police Department’s “heat list.” The heat list, which keeps expanding, disproportionately includes men of color, partly because the algorithm uses arrests as inputs (which, again, are more a function of policing practices than of crime realities).

I am also not minimizing the genuine fear that generated Ms. Williams’ op-ed. As anyone who has studied the history of policing in America knows, surveillance technologies tend to be used against communities of color first and in a disproportionate manner. Georgetown University Law School recently hosted a conference on the “color of surveillance” (the “color” unsurprisingly is predominantly black and brown). This reality must shape our critical reaction to any new technology that risks replicating old biases.

In the final analysis, op-eds and news stories about the dangers of racial bias in predictive technologies may be helpful (even when not completely precise), because they generate a societal fear that the companies will respond to through their products. If citizens react to the potential problems, the technology companies can respond and offer ways to solve those problems. In fact, new entrants into the predictive policing field have begun advertising themselves as offering solutions to the problem of racial bias in the data.

But the danger of creating fear without engaging the complexity of each technology is that otherwise worthy arguments can be too easily defeated. Honest conversations are hard. Nuanced debates are less “headline worthy.” But the future of policing depends on truthfully examining these technologies with an eye toward improving them, not demonizing the companies behind them (unless they actually deserve it).

Racial bias is a real concern for predictive policing, now and in the future, but we need to be thoughtful in our criticisms and precise in our analysis. The future of big data policing depends on it.