The Danger of Automating Criminal Justice

Advocates in Philadelphia say a new tool to assist judges in sentencing could perpetuate bias.

On a recent Wednesday afternoon, activists and attorneys packed a Philadelphia courtroom. But they weren’t there to support a defendant or oppose a ruling—they were questioning the use of a new tool designed to aid judges in sentencing.

Over the course of three hours, 25 people testified during the public comment period against a risk assessment instrument proposed by the state’s Commission on Sentencing. All 25 argued that the tool, based on an algorithm, would perpetuate racial bias in criminal sentencing. A similar hearing was held a week later in Pennsylvania’s capital, Harrisburg, and again there were no supporters among those who testified.

“The fundamental problem is that the tool doesn’t actually predict the individual’s behavior at all,” said Mark Houldin, policy director at the Defender Association of Philadelphia. “It predicts law enforcement behavior.” He continued: “As we know, [arrest rates] can depend on people’s race and the neighborhoods that are targeted by police activity.”

Predictive algorithms have been used in the court system for years, most prominently in decisions around pretrial detention. One such tool developed by the Laura and John Arnold Foundation is used in at least 29 jurisdictions, including three entire states. A smaller number of jurisdictions use risk assessment at sentencing—roughly 13 in all—and Pennsylvania is poised to follow suit.

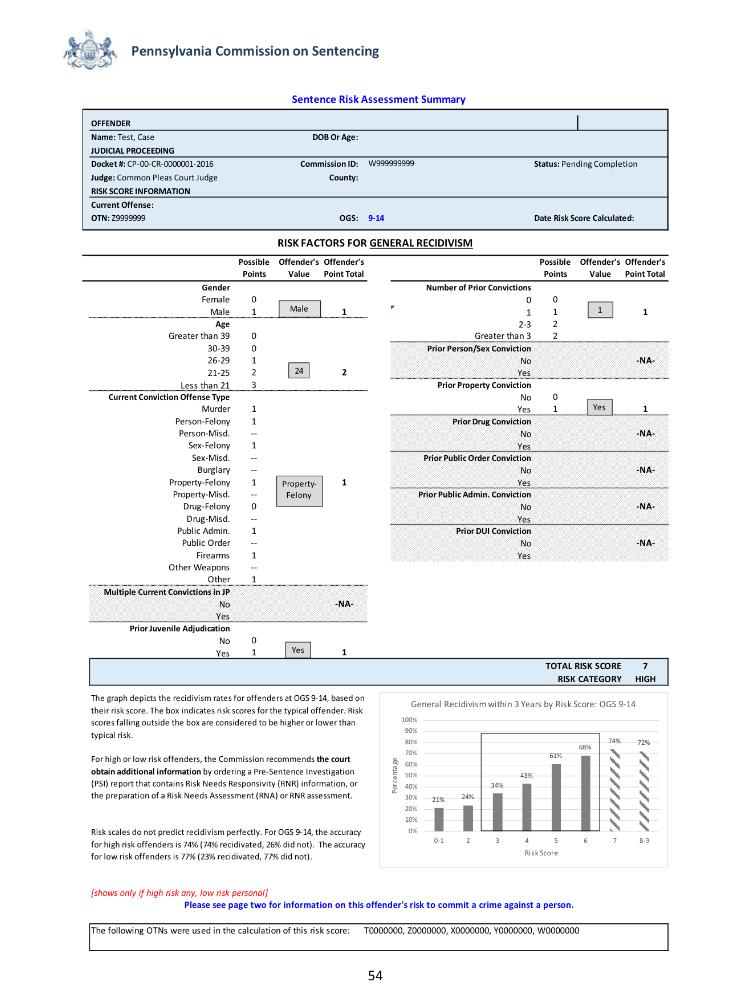

The instrument being discussed in Pennsylvania is essentially a scorecard of factors including age, gender, number and type of both prior and current convictions, along with juvenile run-ins with the law, all of which are used to generate a score. Theoretically, the score rates a person’s likelihood of recidivism three years after being sentenced to probation, or following incarceration.

Supporters say such tools can counter human biases in sentencing by using data instead, reducing the impact of a racist judge, for instance, by giving him or her a more objective source of information. Ultimately, that could help reduce prison populations without threatening public safety, they say. But opponents believe discrimination is baked into the data, and perpetuated with the backing of “science.”

Adam Gelb, director of the Public Safety Performance Project at the Pew Charitable Trusts, is a supporter. “Proponents of the tools argue—with good evidence—that when they are well-designed and well-implemented, the tools improve the accuracy of the diagnoses and responses [by judges], and improve consistency and transparency in decisions that are being made,” he said. But he acknowledges critics’ concerns. “There is such extensive evidence now of racial biases in other parts of the system that lawyers and advocates have rightly recognized that risk tools are an area where bias could be creeping in as well, especially as their use grows.”

The debate has come to a head in Pennsylvania. At a hearing on the risk assessment last year, only three people testified to a nearly empty room; this time, the room was full. Houldin attributes the surge of participation, in part, to the overall push for criminal justice reform in Philadelphia, sped by the election of District Attorney Larry Krasner last fall. “We’re at a point where people in Philadelphia aren’t just realizing that there is a problem but demanding that there is a solution,” he said. Many of the prominent organizers who propelled Krasner into office were also in the room to watch or to testify on the sentencing tool, as were two high-ranking members of his office.

Barring a legislative change, the implementation of a sentencing risk assessment tool is inevitable in Pennsylvania because of a 2010 law that mandated that courts adopt one. The law, which passed without much fanfare, was part of a package of reforms to reduce the state’s prison population. The intent of the statute was to aid judges in determining which offenders could be safely diverted to community supervision or residential treatment programs, said Mark Bergstrom, the state Commission on Sentencing’s executive director.

The commission, which is responsible for developing the proposed tool, was supposed to vote this week on whether or not to adopt the current version. But after the dramatic public hearing, the vote was postponed until December. This window will provide “an opportunity where we can ask those who have been critical to present proposals they have for an alternative approach,” Bergstrom said.

He thinks the tool has been unfairly criticized by people who think it’s meant to dictate a sentence. “It’s not about more punishment or different punishment,” he said. “It’s about looking at what kind of needs the offender has.” A closer look at someone who scores very high, for example, could reveal a history of substance dependency that would be exacerbated by time in prison. In theory, the judge would use that information to recommend an alternative such as residential addiction treatment.

But critics argue that in practice it could lead to an opposite outcome. Just labeling a person high-risk inherently clouds a judge’s perception of that person and “will impact sentences greatly,” Houldin said.

Opponents are particularly concerned about the fact that the tool uses prior arrests in its definition of recidivism. Critics say that low-income and minority neighborhoods are often over-policed, so people who live there are more likely to have been arrested—even if never convicted. By the commission’s own analysis, the instrument predicts that Black offenders are a full 11 percentage points more likely to reoffend than are their white counterparts.

Bergstrom said he appreciated the concern about using the number of arrests, but added, “I’m not sure how we can develop an instrument, which we’re required to do, if we can’t use criminal justice data or demographic data.”

This gets to the core of the argument against these tools. “We are not eliminating bias from the decision making, we’re embedding it even deeper,” said Hannah Jane Sassaman, a Soros Justice Fellow studying risk assessment. “It’s really important for jurisdictions to go through the extraordinary culture change” to create a more equitable justice system, Sassaman said. She fears that predictive instruments will give jurisdictions political cover to seem like they’re making changes without truly reforming.

Rather than trying to identify high- and low-risk defendants, Sassaman suggests, every defendant’s history should be considered in court. “We need to be encouraging judges, and giving them more resources, to ask about a person’s humanity,” she said. Pre-sentencing investigations “should be whole and complete.” This would enable a judge to consider in every case whether prison time is an appropriate sanction.

Nationally, there is scant research on how effective predictive algorithms are at reducing prison and jail populations. While the implementation of such tools is often heralded as an advance, it may do little in practice. One of the few published studies examined Kentucky’s use of a pretrial risk assessment tool. It found that there was only a small increase in pretrial release, and that over time “judges returned to their previous habits.” Within a couple of years, the study found, the pretrial release rates in Kentucky were actually lower than they were before the tool was implemented, and lower than the national average.

Douglas Marlowe, chief of science, law, and policy at the National Association of Drug Court Professionals, trains judges on how to use predictive tools. In his view, one of the biggest problems with the tools comes down to semantics. “Risk, need, responsivity. These are the least understood terms in the criminal justice system. You won’t get five people to agree on the definition,” he said. When a judge sees that a person is “high-risk” or “high-need,” his or her reaction could be to give the person a longer sentence. But, Marlowe explains, depending on the individual circumstance, this could be the polar opposite of what the score indicates. “High-risk could mean that this person really needs residential treatment, and not prison at all,” he said. He suggested that practitioners “strike the word ‘risk’ from the criminal justice lexicon and replace it with ‘prognosis.’”

Marlowe sees the use of algorithms in the criminal justice system as inevitable, which he considers a positive development. But, he cautions, judges need to be rigorously trained and their conduct must be scrutinized. “You don’t just throw a tool at a problem and then move on,” he said. Using an analogy from the medical field, he added: “A scalpel in the hands of a layman is a knife.”