The Appeal Podcast: The Risks of Risk Assessment

With Hannah Sassaman and Matt Henry

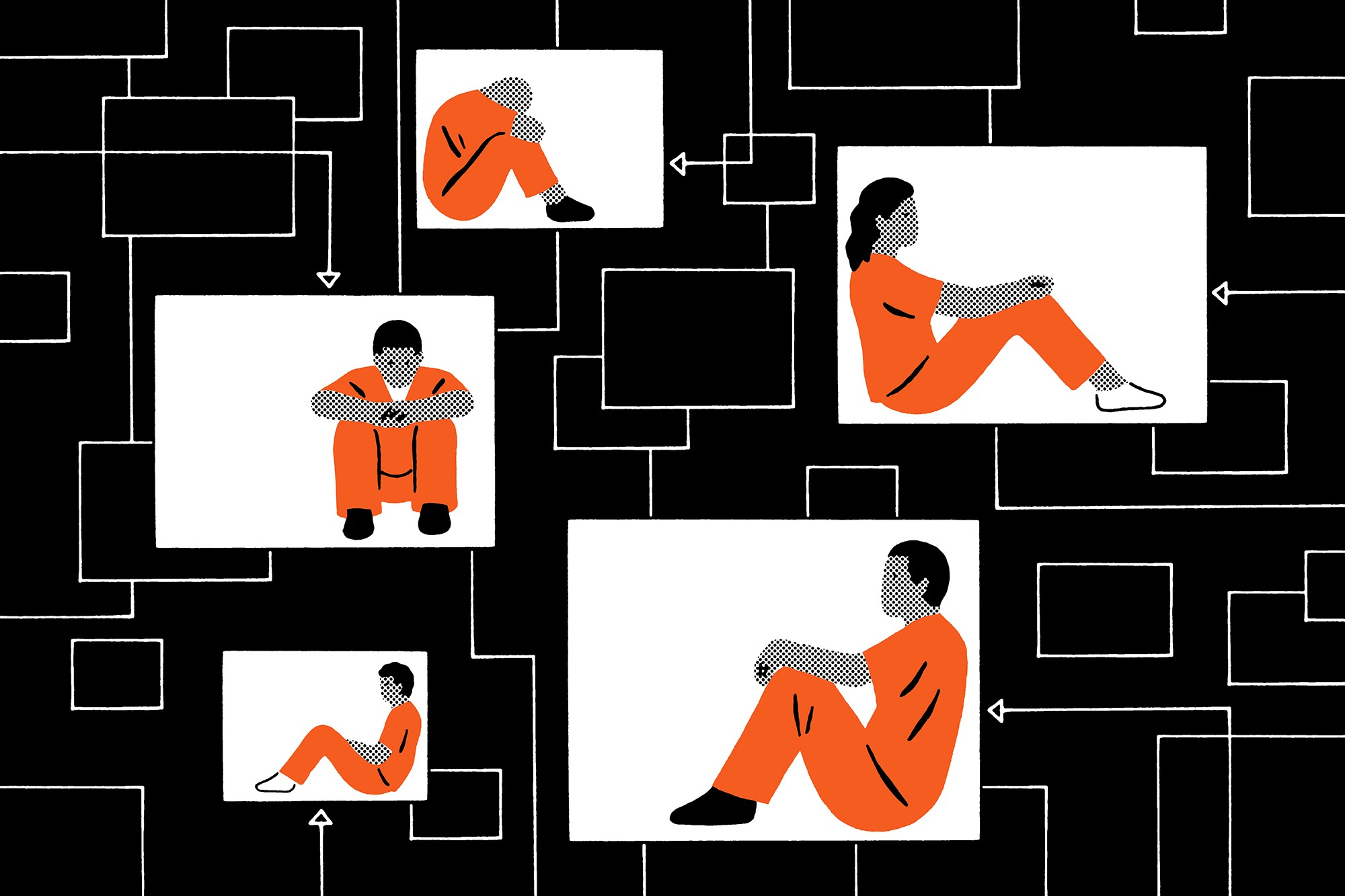

As more and more states seek to abandon cash bail, a system widely seen as unjust and discriminatory, a question has emerged: What should replace it? Increasingly, the answer involves some sort of “risk assessment”—tools designed to predict an arrestee’s likelihood of fleeing prosecution or committing another crime. They’re also being used in sentencing and parole decisions. Today we are joined by Hannah Sassaman, policy director of Media Mobilizing Project, and Matt Henry, chief technologist and legal counsel at The Justice Collaborative, to discuss this notoriously fraught topic and why relying on algorithms doesn’t ensure justice.

The Appeal is available on iTunes and LibSyn RSS. You can also check us out on Twitter.

Transcript

Adam: Hi, welcome to The Appeal podcast. I’m your host Adam Johnson. This is a podcast on criminal justice reform, abolition and everything in between. Remember, you can always follow us on Facebook and Twitter at The Appeal magazine’s main Facebook and Twitter page and as always you can find us on iTunes where you can rate and subscribe to us and if you like the show and you haven’t rated or left a review, please do. That would be much appreciated.

The movement to end cash bail, a system that’s broadly seen now as racist and anti-poor, has become more and more mainstream. But with this mainstream appeal comes the next logical question: what replaces the system? Filling that void increasingly is what’s generally called “risk assessment,” a catch-all term to describe a host of tools, typically algorithms designed to predict an arrestee’s likelihood of missing a court date or fleeing prosecution. Today we are joined by policy director of Media Mobilizing Project and Appeal contributor, Hannah Sassaman and Appeal writer Matt Henry to discuss this notoriously fraught topic and to break down racial disparities, misconceptions and the overall risk of risk assessment.

[Begin Clip]

Hannah Sassaman: The data that risk assessments are drawing from is racist at its root because we’re taking a look at a practice of criminal justice, a practice of policing, a practice of redlining in terms of housing, divestment from education, divestment from jobs, from transportation. Many, many historic wars against poor folks and folks of color, the data that trains these tools and the data points that they’re trying to predict are based in a practice of criminal justice that overcriminalizes black and brown people.

Matt Henry: And the way that predictive models work is they assume that the future is going to be like the past in important ways. So if the past involves things like racist policing, you know, racist courts, if the model assumes that the future’s going to be like the past and the present, then we’re just going to continue to perpetuate those things unless we build models in a way that is really intentional about mitigating those harms, if that’s even possible.

[End Clip]

Adam: Thank you so much for joining us.

Hannah Sassaman: Hey, no problem. Thank you so much for having me.

Matt Henry: Thanks for having me.

Adam: Okay. So y’all wrote a risk assessment explainer for The Appeal, which was incredibly helpful and I thought it would be a great starting point to have a conversation about the minutiae because the latest trend in criminal justice is bail reform, cash bail reform, and the most pressing issue that’s the logical implication of that is what’s generally called risk assessment, which is considered an alternative to cash bail. Supposedly, at least in theory, it’s supposed to be less discriminatory, anti poor and anti people of color. You write that there are different types of risk assessment historically. There’s clinical, actuarial, and algorithmic. For those listening at home who are totally new to this concept can you give us a breakdown in general terms of what risk assessment is historically, what it is in its current iteration and what, what is sort of the most popular version of it?

Matt Henry: Sure. Um, so risk assessment as a practice is nothing really new, right? In the States it dates back to the 1930s or so in the parole context, but that was very much what we would consider a clinical risk assessment, which involved, you know, sort of an expert making judgements based on clinical opinion, you know, so there’s some learned opinion that goes into it. And then in the eighties and nineties it shifted from being less about one person’s judgment to, you know, this actuarial model where we’re talking about data and making statistical projections, you know, based on group data. And in the more recent iteration the actuarial has sort of become the algorithmic where those calculations are performed by computers. And so that’s kind of where we’re at now.

Hannah Sassaman: Yeah, so risk assessment, if you’re coming from the perspective of someone who lives in a city like Philadelphia, like I do, which is the poorest big city in the United States, it’s also a majority minority city. More than half of the folks here are black, brown, come from an immigrant background. What it means is that the data and that the clinical to actuarial to algorithmic definition is exactly right. But the data that feeds all of those different things, whether it’s, you know, a bail magistrate like looking you up and down and deciding whether to set you a heavy money bail or incarcerate you pretrial or let you go or if it’s a series of tables where you give one or two points to “has a previous arrest,” three points to “doesn’t have a fixed address,” two more points to “struggles with mental health issues” or whether it’s many, many different factors that might be weighted in so many different ways and take a different path through the data for any particular individual person, like the same kind of algorithms that sell you meal plans on Facebook or things like that. Whether it’s any of those different things, the data that risk assessments are drawing from is racist at its root because we’re taking a look at a practice of criminal justice, a practice of policing, a practice of redlining in terms of housing, divestment from education, divestment from jobs, from transportation, many, many historic wars against poor folks and folks of color, the data that trains these tools and the data points that they’re trying to predict are based in a practice of criminal justice that overcriminalizes black and brown people. So what we’re taking a look at is, as Michelle Alexander said in her Op-Ed earlier in 2019 called “The Newest Jim Crow,” it’s another form of racial profiling. 
The question that I think a lot of jurisdictions are trying to answer is whether or not risk assessment, whether it’s guiding a judge or a bail magistrate or it’s guiding another high stakes decision maker, is it more or less racist than the decision maker and what is the role of communities in trying to have power over or answer questions when you take that practice of criminal justice and you slap science at the top of it and you put it into the highest stakes decision that can happen to you, whether or not you are free or in jail?

Adam: Right. So there’s this racist component to it and people are generally quite mystical and deferential to this notion of algorithms. I think of New York activist Josmar Trujillo who once told me, he said predictive policing, which isn’t the same thing, but it’s similar kind of hype around it, was kind of stop-and-frisk 2.0 for kind of wary liberals. That if you say something’s an algorithm, if you put an algorithm on it, it gives it a sense that it operates outside of moral decision making that it’s, ‘oh, we can’t do anything about it it’s just this this Oz-like computer that gives us information from below,’ but you write that “Despite the talismanic manner in which people deploy the word “algorithm,” nearly every aspect of such decision-making is ultimately dependent on human ingenuity (or lack thereof). Algorithms are designed by humans, run on computers that humans built, trained on data that humans collect, and evaluated based on how well they reflect human priorities and values.” Unquote. To what extent are risk assessment tools in your opinion, in a sense, a way of sort of shifting moral decisions onto this nebulous computer and then therefore we’re not racist, the computer’s racist?

Matt Henry: Right. I think that’s sort of the danger in calling risk assessments a reform tactic, right? I mean, one of the reasons that algorithmic risk assessments have become so popular is because of the sort of criminal justice reform movement. You know, bail reform in California meant implementing risk assessment. Part of the First Step Act that was just passed federally involved creating a risk assessment. And so using the rubric of reform to implement these tools is problematic inasmuch as, you know, Hannah was saying earlier, the data that go into these models come from sort of a pre-reform era. And the way that predictive models work is they assume that the future is going to be like the past in important ways. So if the past involves things like racist policing, you know, racist courts, if we assume that the future is going to be like the present, if the model assumes the future’s going to be like the past and the present, then we’re just going to continue to perpetuate those things unless we build models in a way that is really intentional about mitigating those harms. If that’s even possible.

Hannah Sassaman: Right. Uh, I think that that’s a really good point. There was a great paper that was written by some of the principals and lead researchers at this think tank down in DC called Upturn, um, and it’s called “Danger Ahead” and it thinks really closely about sort of the systems change that you were just bringing up. Right? So if you take a look at New Jersey, which has had a large amount of decarceration as well as almost elimination of the use of money bail since the statute signed by then Governor Christie was placed into effect, they do use a risk assessment there, but they’re also, and anyone who was involved in that systems change, whether it’s someone at the court administration level or one of the extraordinary organizers or activists that helped to push to make it happen, including the Drug Policy Alliance, including the ACLU of New Jersey and many other groups, there was major, major culture change. I remember talking to Alexander Shalom, who’s one of the senior, uh, counsels at the ACLU of New Jersey and he noted to me that even when they just started talking about bail reform and started pushing the legislation, well before any major changes were put into effect, the judges already stopped in many different cases using money bail as incarceration. So, um, what Upturn brings up is the idea of zombie predictions, right? Where you have an extraordinary amount of data taken from probably the height of stop-and-frisk in, you know, along the east coast or many other extraordinarily racist practices of, you know, criminal justice. And that’s what’s trying to help you answer your question of whether or not, and something we should have said earlier, risk assessments generally answer one of three questions or more than one. They answer whether or not you will FTA or fail to appear. Will a person come back to court safely if released? They also try to answer a question depending on the statute of your state. 
This is not the case in New York or in Massachusetts where you can’t test for this, but they’re also trying to test for something called dangerousness.

Adam: (Laughs.) That’s not dystopian at all. What, what is your essential dangerousness?

Hannah Sassaman: Well, it’s true, right? So they’re trying to guess whether or not, the way it’s defined, the way that it’s sold in the literature, as they say, will you recidivate again, pretrial? But I think it’s worth discussing because actually what we, we’re not trying to predict the behavior of an accused person at all in that case, we’re trying to predict the behavior of the police. Will they arrest you again? And so we get to this place basically where we’re asking these major fundamental questions, what is the purpose of jail? What is the purpose of bail? And so if you’re trying to, and I’m not saying that, I have been told multiple times, and I completely understand, that it’s dangerous to say that we want to replace bail with risk assessment and believe me, we don’t want to do that. And that’s not what we’re fighting for. But legislators, Kamala Harris tweeted that in April of 2018. Major decision makers use that as a shorthand despite the fact that people on all sides of this issue say that we’re never supposed to use a risk assessment in lieu of a decision by a judge or in lieu of a bail decision. It’s not supposed to be swapping out one for the other, but we get to this place where, because the culture change of a particular jurisdiction, because the resources on the table at that jurisdiction, to truly understand what this actuarial science means might be low while they’re dealing with the massive bureaucracy of trying to reform a system that has been the way it is for decades, if not centuries, they might not understand that you’re not supposed to use a risk assessment tool, for example, to lock someone up. They might not understand that.

Adam: So let’s drill down into specifics here because I think some people listening may say, okay, well risk assessment is racist because the criminal justice system’s racist. Let’s talk about specifically why that is. And I want to touch on California Senator Kamala Harris and presidential candidates’ reliance on risk assessment later cause I do think that’s important. A lot of this is becoming, Bernie Sanders is doing this as well. A lot of this is becoming very buzzwordy, so we’ll talk about that later. But first let’s talk about what we mean when we talk about racist criteria or criteria that will necessarily discriminate. Two of them are likelihood of rearrest and likelihood of being able to return to court for court dates. Now of course, arrest has zero correlation or zero relation to actual objective criminality. As we all know, right? Certain neighborhoods are over policed. Police aren’t going around to gated communities and seeing who’s smoking weed in their house. That necessarily if you use the likelihood of arrest as a criteria, you’ve already committed a, a massive racial infraction. So for those who sort of maybe hear racist policing and kind of roll their eyes for whatever reason, let’s really talk about what that means manifestly in terms of what the input is. Uh, in terms of court appearances, which obviously discriminates against people who can’t afford childcare, who can’t afford to leave their jobs. So let’s get into some of that.

Matt Henry: Yeah, no, I think that’s exactly right. I mean, when you talk about failure to appear, um, you know, there are a million reasons why somebody doesn’t come to court. Childcare as you said. And a lot of times these tools are using things like likelihood of arrest to predict things like failure to appear. You know what I mean? If it’s a bail, if it’s a pretrial risk assessment, sometimes we’re using likelihood of rearrests in the future as a proxy for likelihood of coming to court because the likelihood of somebody coming to court, it’s something that’s really hard to measure. So we’re using these things, these really inapt sort of comparisons, between likelihood of getting arrested, which again, is problematic in all the ways that we’ve been talking about to predict this other thing that’s really largely unrelated and occurs over a time horizon that’s really unhelpful in predicting the likelihood of somebody coming to court. Court cases for like a district court misdemeanor case, you know, these things typically resolve, at least in my experience in a few months to a year. But when we’re talking about recidivism being risk of rearrest over the course of like two years, like obviously that time horizon just doesn’t work. So there is a mismatch between what these things are predicting and how they’re being used.

Hannah Sassaman: Right. And so if you take a look, for example at the Arnold Ventures, the John and Laura Arnold Foundation pretrial safety assessment and take a look at the different factors in there. I think to their great credit, they don’t use arrest as a factor because as we’ve already just discussed the extraordinary bias in the overpolicing of poor black, brown, queer, immigrant folks, right? But conviction is heavily used both in its predictive calculations for failure to appear for what they call new criminal activity, which is will you get arrested again if you’re released? And new violent criminal activity, which means will you get arrested again on a violent charge? And conviction is weighted in all of those, any prior conviction. And as we know in the United States, well over 90 percent of convictions are pled. So you might have someone who, I was working directly with an old colleague of mine earlier in March of this year, in March of 2019, Angela Barnes, and Angela’s husband, Jonathan. He had been charged with a crime. He was locked up pretrial. He had had some things going on in his past. He couldn’t afford his bail. So he took a plea and at that plea, he was assigned three years of probation. Later he was, you know, trying to, he, he lost all of the work that he had at that time. He had a bunch of pickup jobs doing, you know, laboring construction kinds of stuff. He tried to apply for a good steady job for his family at SEPTA, which is the, uh, the bus company, the Public Transit Company here in Southeastern Pennsylvania. And they offered him the job, but then when they saw he was still serving his sentence of active supervision, active probation, he lost that job. He wasn’t able to get it. And so that conviction, if a risk assessment had been applied to him, the previous conviction that he had would have been counted against him. In fact, that he had to take a plea, he was pushed to take a plea because he needed to go home. 
He needed to get out of that jail cell. So that means that you’re dangerous forever according to one of those tools. And as we know, the cost of bail, the cost of pretrial incarceration is much, much heavier for black and brown folks. And they are a lot more likely to take a plea. And you can see the evidence of this. If you take a look at racial disparity, um, incarceration rates in Pennsylvania at the Prison Policy Initiative, if you take a look at their chart of racial disparities, um, at least from 2010 and they’ve been updating these numbers for every one white person in the Pennsylvania jails, there are ten black people. And so we say that this data is objective. We say it’s science, but if we’d go down into the individual story of any of these folks, you hear the history of racial disparities and suffering that don’t actually give us what we want. It’s not actually making our communities safer to lock more people up pretrial. It’s not actually making our community safer to apply a sentencing tool rather than an individualized conversation at sentencing, which is on the table also in the state of Pennsylvania and so we’re at this place basically where we also have to get back to the conversation, especially for pretrial, we have to get back to the conversation of what we really need to keep us safe and what people around the United States from Civil Rights Corps to the ACLU to incredible formerly incarcerated people’s groups like Just Leadership USA and many others are fighting for is a true individualized arraignment. So if I am accused of a crime, I don’t just get two minutes in front of a closed circuit TV with a bail magistrate, which is, by the way, that’s exactly what happens right now in the city of Philadelphia. Instead, I get a lot of time with a lawyer next to me arguing for my freedom as an individual. 
And instead of applying risk assessment, we can just assume that the vast majority of folks, obviously there’s always going to be the opportunity for a DA to say, ‘I really want to have a fight about this particular person,’ but we can assume that the vast majority of folks can just go home and we don’t need to predict their failure to appear. Instead, if someone is in an active drug addiction, if they’re struggling with childcare, if they’re struggling to take care of a disabled mother or grandmother, if they simply can’t miss a day of work, we can meet those folks’ needs. We can send them a text message like Jacob Sills and Uptrust do. Like my dentist does reminding me to come in tomorrow. Like we’re trying to calculate things that don’t actually matter to public safety. And what we’re doing is, is we’re potentially putting and there’s a few other things I want to talk about, we’re potentially putting a tool that can not just vastly increase racial disparities and other forms of, you know, oppression in our society, in a computer, into a system, but we’re also not actually always decarcerating when we use risk assessment, right? So New Jersey has used risk assessment as a part of decarceration. So has, you know, the city of DC, which has had risk assessment for a large amount of time. If you actually study cities like Spokane, Washington, which put a risk assessment into place and that was a part of increasing pretrial incarceration by 10 percent.

Adam: Right.

Hannah Sassaman: If you take a look at Megan Stevenson’s study “Assessing Risk Assessment in Action” of Kentucky, which has had risk assessment at play for quite a long time, they um, she found, and this is one of the first independent studies of risk assessment, not actually paid for by the same folks who build these tools.

Adam: Right.

Hannah Sassaman: If you take a look at the state of Kentucky, for about six months, we found the judges there, she found the judges there, both stopping a lot of use of money bail and decarcerating, sending more people home. But after those six months they just returned to parity. They returned to their previous setting of incarceration or not. She even found that if you take a look at rural counties of Kentucky where the judge is most likely white and the person accused of harm is also most likely white and if the risk assessment said to send them home, they were more likely to do it. But if you were in an urban county of Kentucky where the judge is most likely white and the person accused is considerably more likely of being an immigrant, black, brown, a refugee, the judge would more regularly buck the recommendation of the tool even having to fill out, you know, some relatively onerous paperwork in order to put them in jail pretrial. So again, what we’re talking about is do we need a computer that has racist data training it up to do what is constitutionally required, which is to give anyone that you want to lock up pretrial a truly spirited ability to fight for their freedom and not use bail, and the fact that most people can’t afford it, as tantamount to pretrial incarceration.

Adam: Right. Because the general rule is, and this, this gets to the Kamala Harris Bill, obviously she had a history as a prosecutor, is trying to sort of rebrand herself in the run up to 2020 as a quote unquote “reformer” — whatever that means — but any reliance on risk assessment that’s untethered or decoupled from a decarceration strategy with actual goals, actual sort of targets is at best useless and at worst somewhat sinister, right? Because you’re sort of just repackaging the same thing.

Hannah Sassaman: I think that that’s totally right and that’s why honestly like extraordinarily vibrant movements across this country that are fighting for their freedom and they’re working for an end to mass incarceration put out a major statement in July of 2018 about risk assessment because this was popping up everywhere. So well over a hundred groups from the Leadership Conference on Civil and Human Rights to the NAACP, to the ACLU, to the National Council of Churches and a wide variety of local, state and national groups all signed on to a statement that at the top of the document it says “We believe that jurisdictions should work to end secured money bail and decarcerate most accused people pretrial without the use of risk assessment instruments.” It says that, but then it also goes deeply into the fact that we just have dozens of these at play already around the country from San Francisco to Pittsburgh. These are everywhere. And so we go deeply into the kinds of checks and balances that we think communities should get to have if a tool that has such extraordinary problems is to be placed into a pretrial decision making context. So one of the things that it says, the first principle, and you can go to civilrights.org and just look for pretrial risk assessments to read the statement, but it says that pretrial risk assessment instruments have to be designed and implemented in ways that reduce and ultimately eliminate racial bias in the system and that they have to be decarcerating. They have to be sending people home. The Arnold Foundation says that too. They, the Arnold Foundation in March of this year, had a big launch of more funding that they’re putting out into pretrial reform as well as testing out their PSA, their risk assessment tool in more jurisdictions. And they say in their principles of pretrial that these should only be used in a decarceral way. 
And that we need to make sure that communities get to actually input and be part of testing whether or not they’re truly sending people home and reducing those disparities. Uh, Cathy O’Neil, who famously wrote the book Weapons of Math Destruction talks about how when we care about algorithmic decision making, it’s not just about what these tools say, not just about the bias in them and how they communicate that bias, but what they do. We have to audit the use of these tools for the impact they have in the systems. Um, and so that’s something that communities in Philadelphia and around the country are fighting for. And we have a variety of other different principles in that document about how we can reduce the potential harms of them to make sure we’re meeting that goal of everyone getting real pretrial individual justice and vastly reducing the hundreds of thousands of people who are held pretrial in our jails.

Matt Henry: Right. And so many of these models were built to connect people who have needs with services. Right? I mean, part of this was about rehabilitation back in the day, at least in the eighties and nineties, these models were developed to be part of what was supposed to be a healing rehabilitative process and are now being put to use in this like extremely carceral way. So one of the things that could be done to sort of mitigate this is to say, you know as Hannah was saying, like don’t use this in a carceral way. Don’t use this to put people in jail. If we can’t get rid of the bias, you know, Sandra Mayson, a law professor who’s written a lot about risk assessments, her solution is not this is the end all be all but if we can’t eradicate the bias, we can at least use risk assessments in the way that these models were developed to connect people who have needs with services that can help address those needs.

Hannah Sassaman: That’s right. Although I’ll also note, and you know, the, the Movement for Black Lives policy table and Law for Black Lives are working convening a bunch of incredible local community leaders to think about what needs assessment might mean. I just want to reiterate that in many jurisdictions risk assessment is applied without actually meeting folks’ needs or when a pretrial services division, which is often housed in the same building and staffed by the same people as the probation department, so it’s really like having a PO watching you. It’s like sort of pretrial probation. They’re assigning levels of supports that can be very burdensome. Electronic monitoring is assigned regularly, um, as a part of the implementation of risk assessment in Cook County for example, which famously had a major injunction stopping a lot of the use of money bail and has had other decarceral pushes coming from their progressive prosecutor, Kim Foxx. So we have to be careful about over conditioning, about net widening, about putting more burdens on people who really don’t need anything to come back to court in the vast majority of cases. We have an over 90 percent return to court rate here in Philadelphia, and our folks are dealing with major problems.

Adam: And the ones who don’t return to court are not necessarily going out and-

Hannah Sassaman: They’re not putting on their cowboy hat and going to the unincorporated territories.

Adam: Right. (Chuckles.) It seems like so much of this conversation dances around the fundamental ideology of what we’re really talking about because this sort of rests in a kind of academic setting for the most part, I think people really aren’t talking about what the underlying, you know, so if let’s say for example, I’m a frustrated liberal who’s not a radical leftist, not an abolitionist, sort of vaguely is on the kind of more liberal wing of reform side. And I don’t, I don’t necessarily say that as a pejorative, but just that’s, you know, sort of maybe someone listening to this is, ‘okay, well we can’t have cash bail. We can’t have risk assessment. Blue sky. What is the alternative if I arrest someone who’s convicted of 17 rapes?’ Or whatever sort of scaremongering straw man they wished to hold up.

Hannah Sassaman: So I think that the real goal like, I’m a mom, I’m a mom of two little girls and I think the real goal for you, my frustrated liberal friend with your latte and your New York Times and your tote bag and your sneakers and whatnot, like my real goal for you is to imagine yourself in front of a magistrate who is not even a lawyer, let alone a judge, facing the absolute worst day of your life and think about who you are and your full human story and what you would like to say right then so you don’t lose your kids because you’re a single parent. Or so you don’t lose your job because you’ve already spent all your paternity or maternity leave. Because the same factors and features which face low income folks, primarily folks of color in this country, we all face them, you know, in different ways and you get to do, you would like the right to tell your human story, right? And so when you bring up the straw man of, you know, a person who’s committed an absolutely heinous set of acts of deep, deep abuse. I do want a world where we can restore our relationships and deal with harm in ways that don’t involve cages. And I want to build that world with all of you. But while we’re working towards building that world, if the prosecutor and the judge truly want to incarcerate that person or to incarcerate you, if you have committed those acts allegedly, pretrial, then you should get the right to tell your story with an attorney who has been able to collect evidence, who can do discovery, who can really fight for your freedom the same way that he or they or she would at any other part, at any other part of the judicial process. Right? And so what we’re not, we’re not talking about and for when we’re talking about restricting risk assessment and many folks are pure abolitionists on risk assessment and I fully valorize and support that as a strategy. 
I think Human Rights Watch, I know the work of John Raphling does that extraordinarily well, um, as well as many other groups around the country. But if we’re in a place where we have to have risk assessment, we should use it to send the vast majority of people home. We should take things that are misdemeanors where you might spend a few days waiting for, you know, your court date, you should get a criminal summons and then go right home and show up when it’s your, your date for your arraignment. So we can clear those folks out. Then anyone who’s got a serious charge on them gets to have that full adversarial court hearing that you would want, Mr. Liberal, when you were there.

Matt Henry: Right and I think, you know, the history of bail reform is littered with good intentions and even good results that start out positively. And then over time, you know, sort of devolve into, you know, just more pro-carceral systems. In Massachusetts where I used to practice, um, you know, there’s a presumption of personal recognizance in cases. So basically you’re supposed to just get out on your promise to come back to court, you know, unless, and then here’s where the caveats start piling up. And over time, the caveats that get added because of dangerousness, because of particular charges that warrant being held without bail, the exceptions come to swallow the rule, right? And then those exceptions start out as exceptions and then they sort of take over and it stops being ‘okay we have to have a hearing, a full hearing about whether this exception applies’ and it really just becomes a box that a judge checks, you know, on your arraignment date and then you’re held without bail for some amount of time.

Hannah Sassaman: Yeah. Yeah. I met with um, some incredible public defenders when they were gathering in Philadelphia, uh, like national public defenders and one of the chiefs stood up in the room and he said, my attorneys tell me that they come back and they say that the judge held the risk assessment score over his head and would say, ‘counsel, I have to lock up your guy because the computer says he’s scary.’ Like, so what we’re talking, I think that the idea of good intentions really, really matters here. People are trying to wean the judiciary of these United States off of the drug that is money bail. I get it. I see it. I understand that judges, whether elected or not, feel the extraordinary weight of letting someone go who then turns around and is allegedly committing a heinous, heinous act.

Adam: Something by the way, that’s been demagogued in tabloids here in Chicago and The New York Post does this in New York as well. They’ll sort of, they actively have police blogs that will seek out, for those who don’t know, people who are out on cash bail or specifically those who were released by cash bail reform organizations and they, they basically stalk them on social media and try to find them so they can pass that along to right-wing media. So this is a real risk.

Hannah Sassaman: Absolutely. And we haven’t talked about, by the way, the people probably funding that, which is the bail bonds industry, which takes a look at the advent of risk assessment as a, you know, threat to their entire survival, um, in many cases. And the bail bonds industry takes a look at the principled resistance of the civil rights movement in these United States as somehow being in alignment with the preservation of money bail, which it absolutely you know is not, and you can call Vanita Gupta at the Leadership Conference on Civil and Human Rights and she’ll tell you that, but we’re at this place where judges say that they need something. ‘You’re going to take bail away? What are you going to replace it with?’ What we need to do is to look at the facts and see that for the vast majority of people, they will come back to court with nothing or with simple reminders. You should take a look at the incredible work of projects like the National Bail Fund Network, which is a project of the Community Justice Exchange or the National Bail Out Movement that is happening, Mama’s Bail Out all around the United States. And you can see that when neighbors bail out their neighbors and bring them home, just that community support is what brings them back to court. You should look at participatory defense. Philadelphia has two and soon to be three hubs, which is another way of holding folks. When you treat people as people, meet their needs if they have them in a non punitive, not obligatory way, clear out the folks who like even if they were convicted wouldn’t be getting a jail sentence, like clear out the people with misdemeanor charges and other charges and then if you truly want to — Prosecutor, Judge — incarcerate someone pretrial, give them the right to fight for their freedom, give them that fully adversarial hearing, the same one you would want.

Adam: Yeah, it’s, it’s a cultural shift away from sort of the default carceral approach to like putting the burden on people to prove why someone should be in jail, not the other way around.

Hannah Sassaman: I think we’re in a place though in society, like I came on March 21st from an incredible press conference that was led by our district attorney, by Larry Krasner, about some new caps that they’re going to be placing in their office on probation and parole. Right? So 37 states in the country have vastly reformed and capped their tails, probation and parole tails at the state level. We have some of the worst, longest, decades long in some cases, probation and parole tails here in the Commonwealth of Pennsylvania. So he’s saying that for his assistant district attorneys, there will be a presumption that if you have a felony charge that you’re not going to have like a supervision tail unless it’s required by law of longer than three years with an average of 18 months for felonies. And then for misdemeanors, you’re not going to have a supervision tail longer than a year with an average of six months across the office. And, you know, when they say that, what they’re saying is is we can stop supervising and watching and testing and setting up for failure most people and just let them be, just let them live their human lives. Now we have to make that same argument in the pretrial space and we have to continue to valorize with the narratives that are coming from people who are leading their own liberation in cities like Chicago, in cities like Philadelphia, in places like Massachusetts, in places like Yuma, Arizona or in Tucson, Arizona, where there’s bail funds that are proving that you can bring someone home and they’re just going to show back up. We need to start valorizing those stories and that research along with the tools that are coming out from these, you know, whether it’s a proprietary company like Equivant, which used to be Northpointe, which makes COMPAS, whether it’s tools coming from researchers like Dr. 
Richard Berk who famously said, you know, ‘I could either lock up Luke Skywalker or release Darth Vader, let me know’ whether it’s, you know, tools coming from the Arnold Foundation, which shares a lot of our values. Like we don’t need those. You don’t need those to make sure people can come back.

Adam: For those that don’t know the Arnold Foundation, it was started by billionaire hedge fund manager, John Arnold. They have a lot of what I would argue sort of dodgy positions on pension reform and charter schools. I think that there is a bit of a concern about billionaires sort of setting the agenda for a lot of these things and I think that’s a reasonable conversation and one we can’t really talk about now, but before we go, I want to talk about two things. Number one, I think that there’s a sort of intuitive vulgarity to this that I think really offends people on a basic moral level, which is that really what we’re talking about is pre-crime. We’re effectively putting people in jail for a crime that they may commit, and I really think that, at least for me personally, this kind of violates a very intuitive moral sense that we can’t be deterministic about people’s fates. Even if you calibrated a perfectly non-racist predictive metric, which is of course I think pretty much impossible because I think the whole idea of trying to predict people’s fates is inherently racist, but what do you say to that? Is that a part of what you think some of the resistance to risk assessment is that unlike even though cash bail is of course itself deterministic in a more indirect way, that it sort of has a, it has a weird kind of dystopian vibe that we’re being told we’re committing a crime before we’ve done anything wrong?

Matt Henry: Sure. So there’s the pre-crime aspect and then there’s the aspect of you being punished for things that other people did in the past. Right? This is aggregate data. It could be aggregate data from, you know, a long time ago. It could be aggregate data from a place that is wholly unrelated to where you come from, like in another part of the country that has nothing to do with you and that data can be used to put you in jail. So there’s certainly that dystopian aspect of it and in a lot of cases, making it all the more dystopian, you know, if it is a proprietary tool that’s doing this like COMPAS, you might not even know the importance of the factors being used to put you in jail, you know, the relative weights that are being used to lock you up such that you can’t even challenge it. So you’re sort of being punished for other people’s behavior in this baffling way. And it doesn’t necessarily, you know, and it’s totally opaque to you. Right?

Adam: Right.

Hannah Sassaman: I think that the other thing about algorithms, and I’m going to get a little wonky for a second-

Adam: Please. We want wonky.

Hannah Sassaman: So the way that any predictive algorithm works, and I know I’m going to get some tweets from my buddies in the data science world about how I’m totally messing this up, but algorithms that are meant to predict something are called supervised learning, right? So what we’ve done is we’ve told the tool what we want it to be looking for, like what result we want it to give us and then we find data in its training base, like in these thousands of records, you know, the Arnold tool uses 1.5 million records from all over the country. VPRAI, the Virginia tool, the VPRAI-R is trained on Virginia records, although sometimes applied in places like Shasta County, California pretty far away. They’ve told the tool what to predict for and they look in the training data for things that match that result when they look at the results for those people and then they spit it out. That’s supervised learning. What I think that the communities that I work with, you know, formerly incarcerated folks, low income workers, immigrants from all over Philadelphia and all over the world or all over the country really are looking for is unsupervised learning. We’re not luddites, we don’t hate science. What we want to do is to actually take a look at the data that is in front of us, and not tell it what we think it should say, not tell it what to predict, but actually try to draw correlations and try to see if we can find root causes that haven’t yet been studied. Like what if we found that in an over policed neighborhood that if you put more streetlights, more trash cans, more signs, more gardens, and you rehab some of the abandoned properties, that it vastly reduced crime. Like what if you found correlations like that? And there’s a, there’s a program looking at that here in Philadelphia now. 
What if communities had the ability to use algorithmic decision making to watch judges or bail magistrates and see which ones were setting way too high bails or who are bucking the recommendations even of the prosecutors, if the prosecutor was saying this person can go home on their own recognizance? What if we could use tools like this so we could watch the police? But right now the builders of these tools are almost exclusively, you know, white, come from a particular educational background, usually male. The people who buy these tools, who have the access to being in these systems usually have similar backgrounds. Not always in any of these cases. But what we’re saying is, is that the questions that they’re asking and the sort of tautological predictions that they’re delivering, which you know when they’re spit out, they don’t even say that a person is, you know, 11 percent likely of being arrested again on a violent charge. They just say “high”, you know, bright red. And the judge doesn’t even say that they’re, you know, 90 percent likely to come back. What we’re saying is, is that that’s burying the information our communities need to keep us safe.

Adam: This opacity is striking to me and anyone who studies charter schools in depth as I have, you quickly learn that they began with a kind of actual lottery system and then slowly that merged into this algorithmic lottery, which is not a lottery at all actually. Lottery is a total misnomer, which implies randomness. And then a lot of these school districts, Baltimore, New Orleans, they use an algorithm that is completely secret and completely proprietary based on the work of some Harvard economists. That foundation that runs it is in a house in Brooklyn. Um, and when you email them and ask them to see like what the criteria is for the algorithm, it’s completely opaque. What schools people end up going to in these communities and they’re saying, ‘okay, we don’t want people to game it.’ And there’s a similar feature in this, which is that a lot of these algorithms are privatized and there isn’t really any democratic input and they are being kind of shopped out to these private foundations which are accountable to nobody which have no real constituency among the affected community. Can we talk about the risk of privatization? To what extent maybe these systems should be democratized instead of pawned off on these kind of billionaire funded NGOs or private corporations?

Hannah Sassaman: Sure. We can talk about the profit motive a little bit. It brings to mind an extraordinarily tragic story to me, which is that the state of Illinois contracted with a private proprietary for profit company called Eckerd for its child welfare algorithm. Right? So this was an algorithm that was applied to try to help, you know, departments of public welfare, social workers to predict whether or not a child would be at risk of injury or death. And what happened was is that the tool in the state of Illinois had a hell of a lot of false positives, meaning that it was identifying primarily black single mothers as being at risk of hurting their kids. And then, you know, dividing families in brutal and often, you know, irrevocably harmful ways. And then it tragically also had a lot of false negatives, meaning that children died and were hurt. And the way that the tool communicated its information to the folks, the social workers and the other staff who are meant to try to identify whether or not this harm was happening was in extraordinarily alarming ways. That wasn’t even, that was not helpful for how they were trying to provide these services. And so the fact that these tools are proprietary was certainly a big piece of it. What we’re seeing is, is that the same rights to tell our stories and to have individualized treatment are being turned into a source of profit, like the data that COMPAS is trained on that Equivant, formerly Northpointe, they don’t share that information. But if you take a look at another study that was put out by um, Megan Stevenson and Chris Slobogin taking a look at an application of COMPAS, looking at, I think it was at sentencing, they found that that tool deeply, deeply weighted age, like overweighted age and didn’t communicate that information to the judge because it was proprietary, it couldn’t communicate that information to the judge. 
And this would be in a situation where the judge might look at age as a mitigating rather than an aggravating factor. Maybe we need to give this kid more support and treatment rather than throw them into prison for a long time where they’re not going to get anything that will make their life better and healthier when they return.

Adam: Okay. One last thing I’d like to ask is what are y’all respectively working on as individuals, together and what can we look forward to in the future?

Matt Henry: Uh, for my part, one of the things that you learn studying risk assessments is that it’s very easy to use numbers to lock people up. But one thing that’s really salient in the history of the American criminal legal system is that it’s really hard to use numbers to prove, to get somebody out of jail or, or, or you know, serious sentencing looking at McCleskey v. Kemp, where it’s basically like ruling out the possibility of using social science to prove bias in the application of the death penalty. So for me, I guess you can look for more work trying to show why this burden shifting exists, why this disparity exists and how we can overcome it.

Adam: Awesome.

Hannah Sassaman: So in January I just finished a Soros Justice Fellowship focusing on all the issues we talked about today about human oversight and accountability of algorithmic decision making in high stakes criminal justice contexts, primarily in pretrial. Since we launched the Statement of Concern last year, there’s been a whole lot of excitement from communities that are either facing, um, the results of litigation where there has been, you know, a mandated end to the cash bail system or a major reform of it because of a lawsuit or there is a law on the table or a proposal for a law or there is, you know, a lot of cooperation happening between criminal justice partners. A lot of folks got funding from the MacArthur Safety and Justice Challenge. We have that funding in Philly. It’s led to a decarceration of our jails by 43 percent and everyone is considering, do we use risk assessment? Or it might be written into the law. So we’re trying to help communities, people who have been directly impacted by jail, by bail, by prison, by harm, to understand what these tools mean and to be able to fight for their own agency if and when they’re going to be applied. And my organization, the Media Mobilizing Project is partnering with the Community Justice Exchange, which I named before, in trying to help support local folks around the country in a few different jurisdictions to take those principles that the Leadership Conference and all of us put out and to put them into practice. So stay tuned.

Adam: Awesome guys, thank you so much. This was fantastic.

Matt Henry: Thanks, Adam.

Hannah Sassaman: It was really great to be with you today.

Adam: Thanks to our guests, Matt Henry and Hannah Sassaman. This has been The Appeal podcast. Remember, you can always follow us on The Appeal magazine’s main Facebook and Twitter page, and as always, you can find us on iTunes where you can rate and subscribe. The show is produced by Florence Barrau-Adams. Production assistant is Trendel Lightburn. Executive producer Sarah Leonard. I’m your host Adam Johnson. Thank you so much. We’ll see you next week.